Method

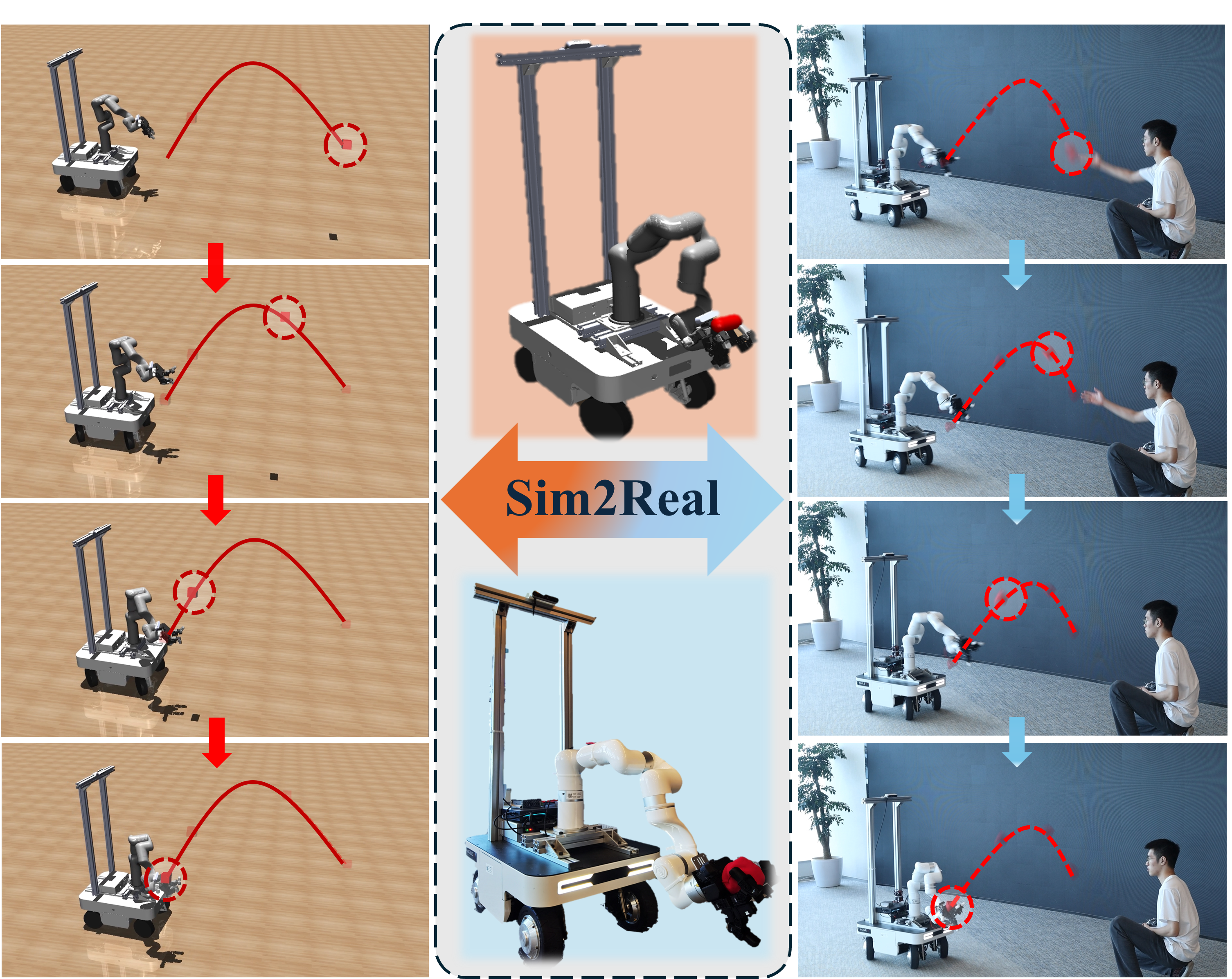

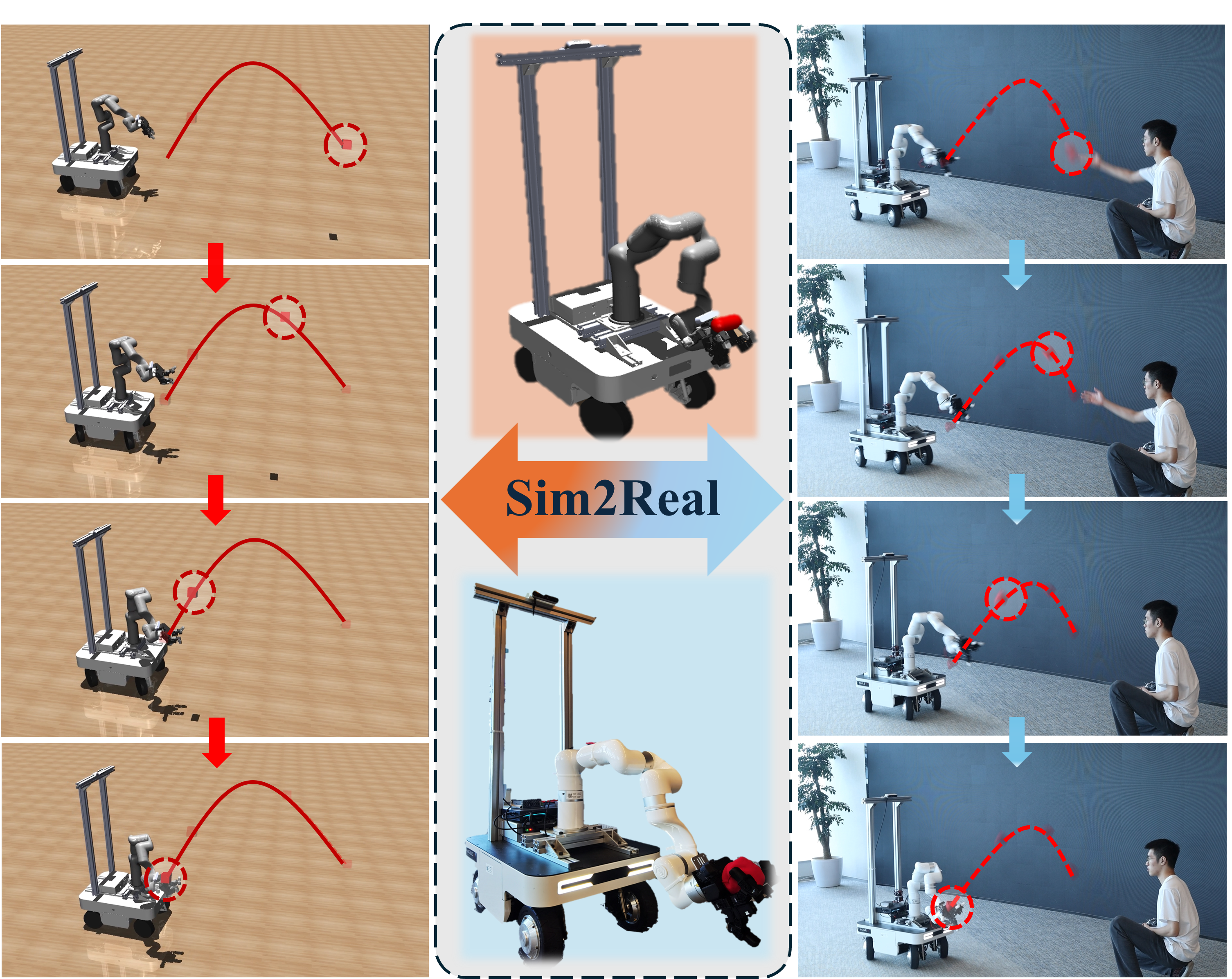

Sim2Real Illustration of Catching Motions

Catch It! uses reinforcement learning (RL) to learn a whole-body control policy for catching objects in simulation, then transfers that policy to real robots (Sim2Real).

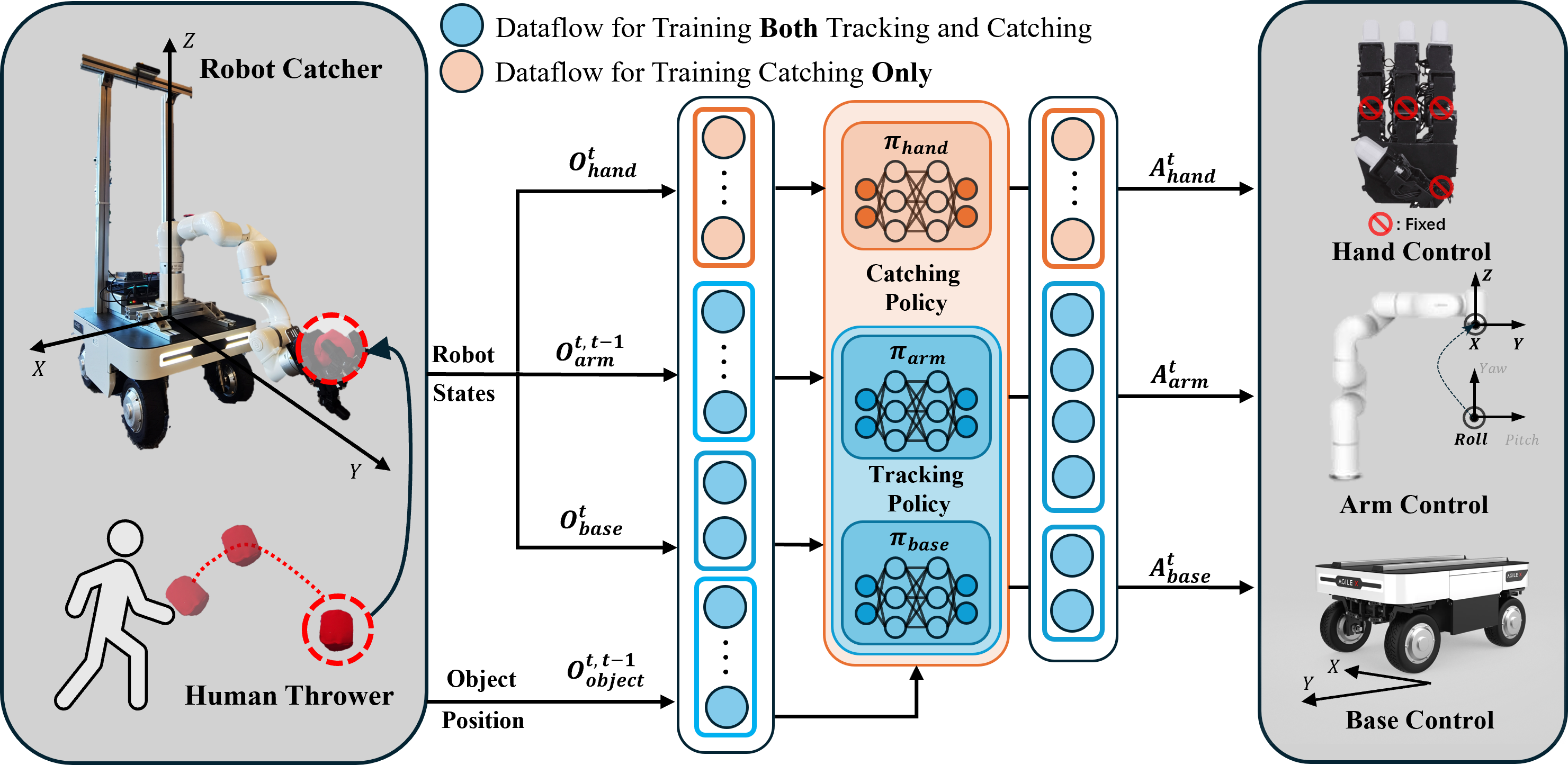

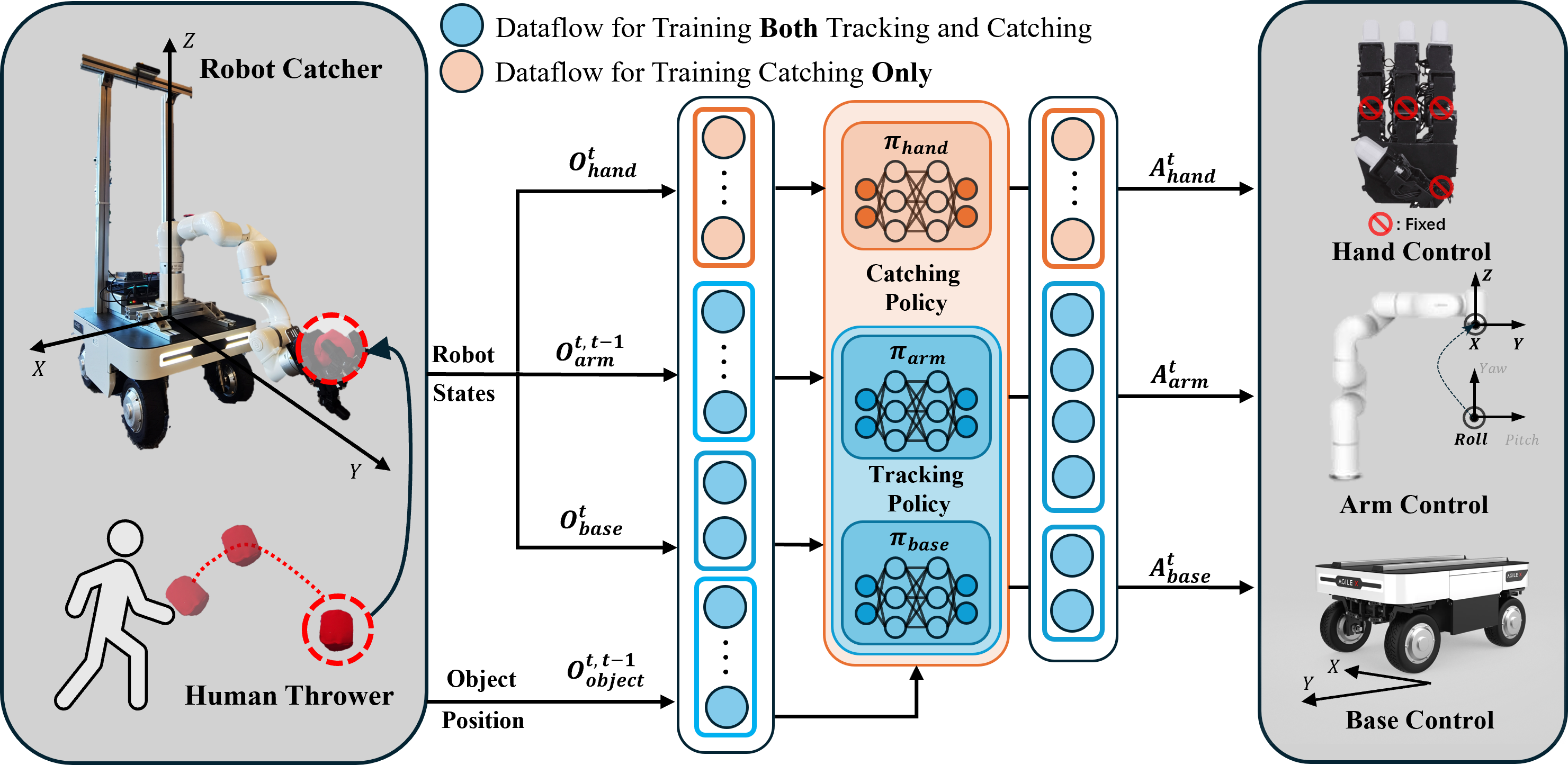

Two-Stage Training Framework in Simulator (Better Efficiency)

Stage 1: Training for Tracking

The beginning of training

We first train the base and arm control policies in parallel on a tracking task. Early in training, the robot is unable to follow the object's trajectory.

After training for a while

After a period of training, the robot has learned to track the trajectory of the object and make contact with it using the palm.

Stage 2: Training for Catching

The beginning of training

We continue to train the robot's catching policy. At the early stage of training, the robot tracks the object easily but fails to grasp it.

After training for catching

Through the two-stage training approach, the robot ultimately achieves the ability to track and catch objects.
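The two stages can be pictured as a reward that changes between tracking and catching. The following is a minimal sketch under stated assumptions, not the reward used in Catch It!: the exponential distance term, the `scale` and `catch_bonus` values, and the `object_held` flag are all hypothetical and chosen only for illustration.

```python
import math

def tracking_reward(palm_pos, obj_pos, scale=5.0):
    """Hypothetical Stage 1 term: approaches 1 as the palm nears the object."""
    dist = math.dist(palm_pos, obj_pos)  # Euclidean distance between 3D points
    return math.exp(-scale * dist)

def staged_reward(stage, palm_pos, obj_pos, object_held, catch_bonus=10.0):
    """Hypothetical two-stage reward.

    Stage 1 rewards only tracking (palm close to the object).
    Stage 2 keeps the tracking term and adds a bonus when the object is held.
    """
    reward = tracking_reward(palm_pos, obj_pos)
    if stage == 2 and object_held:
        reward += catch_bonus  # assumed value, not taken from the source
    return reward
```

In this sketch the Stage 2 policy would start from the Stage 1 weights, so the tracking behaviour is already in place when the catching term is switched on.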

Generalize to unseen objects

To validate the policy's generalization in simulation, we test it on 5 unseen object shapes. The trained policy generalizes robustly to these unseen objects.
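One way to quantify such a generalization test is a per-shape success rate. The sketch below is an assumption about how the evaluation could be organized, not the authors' protocol: `run_episode` is a hypothetical callable that rolls out the trained policy once on a given shape and returns whether the catch succeeded, and `episodes_per_shape` is an arbitrary default.

```python
from typing import Callable, Dict, Sequence

def success_rate_per_shape(
    shapes: Sequence[str],
    run_episode: Callable[[str], bool],  # hypothetical: one rollout, True on a catch
    episodes_per_shape: int = 100,       # assumed value, not taken from the source
) -> Dict[str, float]:
    """Return the fraction of successful catches for each object shape."""
    rates = {}
    for shape in shapes:
        successes = sum(bool(run_episode(shape)) for _ in range(episodes_per_shape))
        rates[shape] = successes / episodes_per_shape
    return rates
```

Reporting the rate per shape, rather than one pooled number, makes it visible if a single shape is much harder to catch than the others.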
